Psychology is the science of behavior and mind. It is an incredibly extensive subject and a relatively new science. Given that most of the progress in the field has occurred in the past 150 years, there are still many unexplained phenomena that researchers are working on. This alone makes psychology incredibly exciting: you are not reading about a subject where all the answers are already known, but are facing the same hurdles that current scientists are facing.

On this site I will primarily focus on social and cognitive psychology. Social psychology aims to understand the underlying factors that make us behave the way we do in a social context, whereas cognitive psychology is concerned with how individuals process information within the brain and how stimulus is linked with response. The underlying concept of cognitive psychology is that psychological phenomena are inevitably linked to cognitive processes in the brain. Given this concept, it is not surprising that cognitive psychology is strongly intertwined with neurobiology. Apart from elaborating on the underlying theories, a substantial part of this site will focus on illustrating psychological experiments, their outcomes and the resulting inferences.

When psychologists, researchers and other scientists conduct studies on the characteristics of successful and accomplished people, they frequently find that these people have a high need for “cognitive closure”.

Social psychologists define cognitive closure as “the desire for a confident judgement on an issue, any confident judgement, as compared to confusion and ambiguity”.

People with a high need for cognitive closure tend to avoid ambiguous situations, disdain unreliable friends and are uncomfortable with decision making under uncertainty. They tend to have high self-discipline and are seen as leaders among their peers.

This perception of leadership comes from the fact that individuals with a strong need for cognitive closure often rely on their first instinct when making a decision and refrain from useless second-guessing or excessive weighing of arguments in prolonged debates.

These people like the “adrenaline rush” of getting things done; they feel productive when they can finish one question and go on to the next. The completion of a task tends to be more important than the task itself.

In this rush for completion, these people tend to be closed-minded, producing fewer hypotheses and thinking less deeply about problems.

A team of researchers wrote in “Political Psychology” in 2003 that individuals with a high need for cognitive closure “may ‘leap’ to judgement on the basis of inconclusive evidence and exhibit rigidity of thought and the reluctance to entertain views different from their own”.

Simply put: Common sense and the quality of decision-making are sacrificed for the sake of task completion and the sense of being productive.

All this sounds very theoretical and surely does not have any real-world applicability.

So what if some Fortune 500 CEO doesn’t listen to his consultants and chooses to colour the new product in charcoal brown as opposed to raspberry red?

In the grand scheme of things, it doesn’t seem to matter.

I beg to differ.

Let me give you the example of the Yom-Kippur War.

This war was fought by a coalition of Arab states led by Syria and Egypt against Israel from 6th October to 25th October 1973.

The Yom-Kippur War was a consequence of the effortless victory of Israel in the Six-Day War against Egypt, Syria and Jordan in June of 1967.

Israel’s superior air force and strategic capabilities crippled the Arab armies within six days. As a result, Israel took control of parts of its enemies’ territory and doubled the area of land that it controlled.

This overwhelming victory completely humiliated the Arab enemies but also instilled a sense of anxiety among the Israelis. They were worried about a retaliation by the Arabs and the Israeli citizens were sure that a potential retaliation would be extremely severe given how Israel humiliated the Arabs in the Six-Day War.

Following the Six-Day War, the Arab leaders gave many speeches in which they vowed to reclaim their lost territory and push Israel into the Mediterranean Sea.

As the Israeli public became increasingly anxious, lawmakers gave an order to the Israeli military to publish regular reports outlining the military developments in Egypt and Syria and indicating the likelihood of an attack by the enemy.

The aim was to give the public transparency and certainty and alleviate public anxiety. However, these reports were oftentimes inconclusive and contradictory. The volatility of the risk assessment would be extreme – one week the reports would indicate that the probability of an attack was high and the next week the same probability would drop to “very low”.

This would lead to lawmakers warning citizens to be on alert, gathering military forces to prepare for defence and then revoking all orders without any explanation. The public was constantly nervous, fearing that war could break out on any day and that the men would have to leave their families behind and rush to the borders at a moment’s notice.

To solve this problem, Eli Zeira was appointed as the head of the Directorate of Military Intelligence (DMI).

A former paratrooper known for his sophistication and political savvy, Zeira had risen quickly through the ranks of Israel’s military establishment. As head of the DMI he had one primary duty: to make sure that alarms were raised only when the risks of war were real. He was supposed to calm the public and lower its blood pressure by providing as clear and sharp an estimate as possible of the probability of war.

In order to achieve this goal, Zeira created his own model which became known as “the concept”. The concept was based on the premise that the Arab states were so clearly outperformed during the Six-Day War that none of them would consider an attack until they had an air force powerful enough to protect ground troops from Israeli jets and had long distance missiles capable of targeting Tel Aviv.

In the spring of 1973, Zeira’s concept was given the first test.

The Israeli government had intelligence that Egypt was gathering military troops near the border between Egypt and the Israeli-controlled Sinai Peninsula. The Israeli prime minister, Golda Meir, summoned her chief of staff and other top advisors to assess the risk of war.

The chief of staff and all the other advisors declared that there was an elevated risk of war and that Israel needed to prepare for defence immediately. When Zeira was asked for his assessment he said that he considered the probability of war “very low”, as Egypt still did not have the military capabilities to hurt Israel and an attack would effectively be suicide for Egypt.

Zeira argued that the amassing of troops along the border was merely a parade to showcase the nation’s strength.

Golda Meir sided with her chief of staff and ordered the military to prepare for an attack. In the subsequent month, soldiers built walls, shelters and other barricades along the border. They rehearsed battle formations and got all their military equipment up to speed. Thousands of soldiers were prevented from taking leave as the Israelis were preparing for war.

Months went by without any attack from the Egyptians and finally in July, the government revoked their public statements and ordered demilitarisation.

Needless to say, Zeira emerged from this situation with his reputation and self-confidence greatly enhanced. Despite all the alarm bells going off around him, he kept his cool and ultimately gave the correct assessment. He showed the public that refraining from useless second-guessing and maintaining a disciplined approach would yield the best outcome.

Using his enhanced reputation and the admiration he had gained in the political sphere, Zeira initiated a cultural transformation of the DMI. He stated that employees would be evaluated based on the conciseness and clarity of their recommendations and that he would not tolerate any “long bullshit discussions”. Once an estimate was fixed, no one was allowed to disagree with or challenge it.

Fast forward to 1st October 1973: the Israeli government again received intelligence, this time that the Egyptians had started bringing convoys to the border in the middle of the night. Egypt’s military was stockpiling boats and bridge-making supplies to cross the Suez Canal, which formed the border between Egypt and the Israeli-controlled Sinai Peninsula. Israeli soldiers at the border reported that they were witnessing the largest build-up of enemy military equipment they had ever seen.

Once again, the concerned prime minister called upon her trusted advisors to gather their assessments. Zeira, staying true to his concept and disregarding any outside noise, again stated that the likelihood of an Egyptian attack was “very low”. Once again, he argued that Egypt still had a very weak air force and lacked the long-range missiles that could threaten Tel Aviv. Unlike six months earlier, the military experts now concurred with Zeira’s assessment and told the prime minister they too believed that there was “no concrete danger in the near future”.

Over the next couple of days, the government received several more clues indicating that an attack was looming. Egypt had now positioned 1,100 pieces of artillery along the border, and high-ranking military officers warned the government and its advisors that they were not taking the Arabs seriously enough. Additionally, the Soviet Union was organizing emergency airlifts for Soviet diplomats in Egypt and Syria and had instructed all Russian families to rush to the airport and leave the country.

Zeira, still confident about his original assessment, argued that the Russians did not understand the Arabs as well as Israel did and were mistakenly evacuating their people from Egypt and Syria. Zeira was frozen on his decision and unwilling to reconsider his position in light of new evidence. The new evidence would have created a more ambiguous, uncertain situation for him, and he therefore tried, unconsciously, to avoid it.

Early in the morning of 6th October, five days after the initial evidence came up, the government received information from a well-connected source that Egypt and Syria would invade Israel by nightfall. At last the military issued a call-up of the reserve force. The 6th October was Yom-Kippur, the holiest day of the Jewish calendar, and families were busy praying at home when the news hit.

By this time, there were more than 150,000 enemy soldiers along Israel’s borders, ready to attack from the Sinai Peninsula and the Syrian-Israel border in a two-front war.

Just a couple of hours after the Israeli military called up its soldiers, thousands of Egyptian soldiers had already crossed the border and were attacking the outskirts of Israel. Not even 24 hours later, Egypt and Syria had around a hundred thousand soldiers inside Israel’s territory as Israel was struggling to respond.

Long story short, Israel’s superior military capabilities helped it repel the invasion and push the enemy back out of its territory. However, this came at great cost. More than 10,000 Israelis were killed or wounded, and around 30,000 Egyptians and Syrians died in this war.

It is likely that the number of Israeli casualties would have been a fraction of the actual number had Israel heeded the warning signs and prepared for an attack as soon as the first clues arose.

Of course, Zeira was heavily blamed.

He was eager to calm the public and relieve its anxiety. However, his eagerness to provide a confident judgement and to avoid any ambiguous facts that did not conform to his original view almost cost Israel its life.

Now, what is the takeaway from this little story?

Whenever you are in a decision-making process, be aware that some people have a strong need for cognitive closure.

Make sure that the completion of the task is not more important than the task itself. Encourage thoughtful disagreement, be aware of your desire to make decisions quickly, and always consider differing opinions and evaluate them on their merits.

We are living in exciting times full of technological innovation and developments. We are in the process of developing driverless cars, using reusable rocket technology to go to space and are making significant improvements in the application of Artificial Intelligence. Despite all these amazing developments, we still know surprisingly little about something that has been with us for as long as humans have existed: consciousness.

A lot of research has been conducted in the past few decades aiming to understand how our consciousness works. It is ironic that we have made such significant progress in other fields of science but are still struggling with the study of consciousness, something for which we have an abundance of empirical data. Some scientists say this is because consciousness is not suitable for scientific investigation, as its very definition is not clear. There is no fixed scientific definition of consciousness and it is not easy to obtain one, since consciousness is not observable. However, John Searle, in his 1998 paper titled “How to study consciousness scientifically”, argued that one must distinguish analytic definitions, which attempt to explain the essence of a concept, from common-sense definitions, which simply clarify what one is talking about. In that sense, the common-sense definition of consciousness “refers to those states of sentience and awareness that begin when we wake up from a dreamless sleep and continue through the day until we fall asleep again”. The definition and understanding of consciousness have undergone a few changes in the past decades and have also split into a few opposing views.

Most of the research conducted on consciousness has focused on our awareness of visual objects, that is, on visual consciousness. The reason for this is that researchers can easily control what is presented to participants and can afterwards directly ask them for a verbal report of their visual experience. The main limitation of this approach was articulated by cognitive psychologist Victor Lamme: “You cannot know whether you have a conscious experience without resorting to cognitive functions such as attention, memory or inner speech.” Therefore, if a participant fails to report a conscious perception, it might only be due to a failure of their cognitive functions.

However, failures of cognitive functions are not the only issue that can arise when conducting experiments to assess people’s consciousness. In post-decision wagering, for example, participants make a decision (e.g. whether a displayed object is light or dark blue) and then place a wager on it.

If the participant’s decision is correct, they earn the amount of money wagered, but if it is incorrect, they lose the money that they wagered. Common sense dictates that wagers should be bigger and more successful if participants’ conscious awareness is high, and smaller and less accurate when conscious awareness is low (e.g. colour blindness).

However, several problems arise when the size of the wagers is the index by which one measures conscious awareness. First of all, using cash as a medium raises the question of the personal value that money has to each participant.

While a wealthy participant may be indifferent towards small amounts of money, their betting behaviour will probably differ from that of a participant who lives from paycheck to paycheck. Furthermore, loss aversion is a natural human tendency, and it is likely to affect participants’ betting strategies: someone who weighs losses more heavily than gains may wager little even when they are consciously aware of the stimulus.
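The loss-aversion problem can be made concrete with a small Python sketch. It is an illustration only, not something taken from the wagering studies: the function names, the 60% confidence level, the stake of 10 and the loss weight of 2.0 are all assumptions chosen purely for the example.

```python
def subjective_value(p_correct: float, wager: float, loss_weight: float = 1.0) -> float:
    """Subjective expected value of a wager.

    loss_weight = 1.0 treats gains and losses equally; values above 1.0
    model loss aversion (a hypothetical parameter, not from the studies).
    """
    expected_gain = p_correct * wager
    expected_loss = (1.0 - p_correct) * wager
    return expected_gain - loss_weight * expected_loss


def break_even_confidence(loss_weight: float) -> float:
    """Confidence at which the wager's subjective value is exactly zero."""
    # Solve p * w = loss_weight * (1 - p) * w for p.
    return loss_weight / (1.0 + loss_weight)


confidence = 0.6  # assumed: participant is fairly, but not fully, sure
wager = 10.0      # assumed stake

neutral_value = subjective_value(confidence, wager)                  # no loss aversion
averse_value = subjective_value(confidence, wager, loss_weight=2.0)  # assumed loss weight

print(f"neutral participant:     {neutral_value:+.2f}")
print(f"loss-averse participant: {averse_value:+.2f}")
print(f"break-even confidence at loss weight 2.0: {break_even_confidence(2.0):.2f}")
```

With the same level of awareness, the bet looks attractive to the neutral participant and unattractive to the loss-averse one, so the size of the wager alone would understate the second participant's awareness.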

Another method for studying consciousness looks at consciousness from a neurobiological standpoint. It has been argued that in order to get a better understanding of consciousness, it is essential to look at what happens in certain brain areas when we obtain behavioral indicators of conscious awareness.

Lamme even argued that we should think of consciousness in neural terms instead of behavioral ones – essentially stating that consciousness is a completely biological and physical feature of the organism rather than some abstract concept.

Lamme focused on visual consciousness and found that when subjects were presented with a visual stimulus, it led to automatic processing of the stimulus at different levels of the visual cortex.

The forward sweep refers to this automatic processing: the visual stimulus “travels” through successive levels of the visual cortex. More specifically, the stimulus first passes through the primary visual cortex (V1), which receives sensory input from the thalamus, and then through the secondary visual cortex (V2), the second major area of the visual cortex. You can imagine this forward sweep as a tennis ball being thrown vertically into the air.

This process takes about 100-150 milliseconds. After the forward sweep, the recurrent processing starts. Recurrent processing basically involves the feedback from the higher to the lower areas, enabling strong interactions between these different areas. Essentially, recurrent processing is the tennis ball falling back into your hands due to gravity.

The cognitive psychologists Lamme, Fahrenfort and Scholte in 2007 observed that conscious experience seemed to be directly linked to recurrent processing. They set up an experiment taking advantage of the masking effect.

The masking effect basically states that when a first visual stimulus is followed by a second visual stimulus, the second one prevents the conscious perception of the first. Lamme, Fahrenfort and Scholte asked subjects to indicate whether a given target figure (e.g. a specific coloured circle) had been presented or not. The subjects were exposed to both masked and unmasked conditions, meaning that in some cases a second visual stimulus appeared right after the first one. During the entire experiment, the subjects’ brain activity was recorded by placing electrodes on their scalps to measure the electrical activity of the brain.

The Electroencephalogram (EEG) findings showed that there was no evidence of recurrent processing in the masked condition, meaning that when subjects were not consciously aware of the target stimulus there was also no recurrent processing going on in their visual cortex.

Additionally, in 1999 several researchers obtained similar results that underlined the importance of recurrent processing in being consciously aware.

Corthout et al. used a special kind of magnetic stimulation, transcranial magnetic stimulation (TMS), to briefly disrupt the functions of the primary visual cortex (V1) with magnetic pulses at different times after presenting the visual stimulus to the subjects. Their findings showed that conscious perception of the stimulus was eliminated when TMS was applied around 100 milliseconds after the presentation of the stimulus, whereas conscious perception did not change when TMS was applied less than 80 milliseconds after the presentation.

Since recurrent processing starts at around 100 milliseconds after stimulus presentation, the magnetic pulses applied at that point disturbed the recurrent processing, and conscious perception was eliminated. Pulses applied earlier than 80 milliseconds, on the other hand, could only have disrupted the feedforward sweep, which, it seems, had no effect on conscious perception. This experiment therefore supports the hypothesis that recurrent processing is required for conscious perception.

These experiments and their findings are making several scientists believe that we must change the way we think about consciousness. Rather than being some abstract concept, it should be seen as a physical process just like digestion or photosynthesis.

Understanding consciousness is a particularly complex endeavour as it requires significant knowledge in several areas such as biopsychology, neuroscience, philosophy and partly artificial intelligence.

A breakthrough in this area would bring us significantly closer to understanding the human brain and the cause-and-effect relationships that drive our behaviour. While research in this area is very important, it could raise several philosophical issues related to free will and the concept of determinism.

Several studies have already shown that for certain actions, our body is already carrying out neurochemical processes before we even make the conscious decision to carry out the action. Therefore, our future findings in the field of consciousness will be vital to understand the real control we have over our actions.

Gender differences have long been a debated subject and are prone to misunderstanding and misinterpretation. In this article, however, I want to write about research that has been conducted on typical sex differences from a neuroscientific standpoint.

Simon Baron-Cohen, a psychologist and professor at Cambridge University, explained that when looking at the neuroanatomy of boys and girls, that is, the structure and composition of the brain, one can find major structural differences. Brain scans of boys and girls revealed that, on average, the male brain is 8% larger than the female brain. This is a volumetric difference and doesn’t necessarily license any inference; however, it has been found even in babies as young as 2 weeks old.

Another difference can be found by examining post-mortem brain tissue. This reveals that the average male brain has 30% more connections (synapses) between nerve cells than the average female brain.

Baron-Cohen also described differences in specific brain regions. The amygdala, which is involved in memory processing, decision-making and emotional responses, tends to be larger in the male brain on average. On the other hand, the planum temporale, an area involved in language, is larger in the female brain.

Baron-Cohen states that the benefit of these findings is that the differences demonstrably exist and are rooted in biology, and therefore there is less room for misinterpretation or disagreement.

These differences in neuroanatomy indicate differences in the psychology of men and women. Research has shown that girls develop and exhibit empathy faster and earlier than boys and that boys have a stronger drive to understand the underlying mechanisms of how the world works. Boys are more fascinated with “systems”, as Baron-Cohen calls them. These could be mechanical systems like a computer, natural systems like the weather or abstract systems like mathematics.

But where do these sex differences come from?

Baron-Cohen and his lab strongly suspected that testosterone could be a major driver of these differences and therefore conducted various experiments and analyses with this focus.

In the year 2000 they published a study involving new-born babies. The main aim of this study was to investigate whether the differences in the mind and behaviour of humans are solely due to cultural, postnatal experiences or if biology also contributes to this difference.

For this experiment, Baron-Cohen and his team studied 100 babies that were just 24 hours old. These babies were presented with two objects: a human face and a mechanical mobile. The team looked at whether the one-day-old babies looked longer at the human face, a social stimulus, or at the mechanical mobile. The results showed that boys seemed to look longer at the mechanical stimulus and girls seemed to look longer at the social stimulus. This result was a strong case for the hypothesis that biological factors were indeed a contributing factor to sex differences. After just one day, a behavioural pattern had been established in which girls were, on average, more inquisitive about people and boys were, on average, more oriented towards the physical environment.

Now that it has been established that behavioural differences also seem to have biological roots, we should understand what these biological roots are and which hormones or molecules play a key part in this process.

Baron-Cohen and his team chose to investigate testosterone, as animal research has shown that directly before birth there is a sudden spike in the production of testosterone. Testosterone is generated in high quantities and then drops off again around birth, and the researchers argued that this surge had “permanent and organizing effects” on the development of the brain. Several experiments on the effects of testosterone levels have been conducted on rats.

For example, if extra testosterone is given to a female rat either at birth or before birth (during the mother’s pregnancy) and her behaviour is then investigated postnatally, one finds that her behavioural patterns resemble those of a male rat. One way to show this is to let the rats run through a maze. Generally speaking, male rats find their way out of the maze much faster than female rats due to their ability to learn spatial routes more quickly. Female rats that had been exposed to extra testosterone found their way through the maze much more quickly than “normal” female rats. This supports the hypothesis that testosterone “masculinizes” the brain and behaviour.

These findings were consistent with other research, and the effects of changes in testosterone levels were very clear at that point in other species. But until then no one had found a way to test them in humans. Baron-Cohen and his team eventually found a way by working with pregnant women who were having a procedure known as amniocentesis. In this procedure, a needle is inserted into the womb of a pregnant woman to extract some of the amniotic fluid that surrounds the baby.

Normally, this procedure is used when there is a suspicion that the baby may have Down syndrome, and the doctor analyses the amniotic fluid for chromosomal irregularities. Baron-Cohen and his team obtained consent from women who were having amniocentesis anyway to take some of the fluid and analyse it for levels of testosterone. The study consisted of deep-freezing the amniotic fluid and, once the baby was born, looking for a correlation between the level of testosterone in the amniotic fluid and the infant’s behaviour.

Specifically, the researchers wanted to see whether the scale of testosterone levels (low, average, high) had anything to do with individual differences postnatally – for example, in rates of language development, in how sociable children are and on other dimensions.

Baron-Cohen and his team invited the children back every year after their birth and had a total of 500 children in their study population.

On the children’s second birthdays the researchers looked at language development. They did this by asking the parents to fill in a checklist of how many words their child knew and how many words their child could produce.

At the age of two, there were children who had a total vocabulary of 10 or 20 words and there were some children who were extremely chatty and had up to 600 words in their vocabulary. Looking back at the prenatal testosterone level, one could see that there was a significant negative correlation between prenatal testosterone and the size of the vocabulary – the higher the child’s prenatal testosterone level in the amniotic fluid the smaller their vocabulary was at two years old.

Another study was conducted with the same children when they were 4 years old. Now the researchers were looking at the children’s empathy. This was measured through the judgement of their parents, empathy tests taken by the children and information on how easily each child mixed socially at school.

Again, a similar result emerged: the higher the prenatal testosterone level that had been measured, the less empathy, on average, the child displayed at the age of 4.

These behavioural studies indicate that hormone levels that are determined prenatally still have a significant influence on the development of a child’s behaviour and brain. This raises a question about determinism: are we really free in our development if it is influenced by prenatal hormone levels that we have no control over? This is a question that I will be writing more about in future.

Stay tuned!

Judgement and decision making are two research fields that rely heavily on statistical judgements. As many judgements in our life are based on an abundance of often ambiguous information, we necessarily deal with subjective probabilities rather than certainties. This is an important reason why decision making is so difficult in real life: we live in a world filled with uncertainties. For example, you might want to choose a career path that pays well. However, there is no guarantee that this same job will still pay well 25 years down the road.

The optimal way of making judgements and decisions generally does not match the way humans actually reason. Firstly, we often don’t have access to all the information that is relevant for making a decision. Secondly, humans might exaggerate the relevance of some pieces of information and weigh them more heavily in their decision-making process. Lastly, humans are rarely in a neutral emotional state while making a decision. Being anxious or depressed can adversely affect the decision-making process, as it makes it difficult for the individual to think clearly about their available options.

Much of the research conducted on judgement has its origin in our everyday life, where we may revise our opinions whenever a new piece of information presents itself. For example, suppose that you believe that someone is lying to you. If you then meet another person who supports their story, your level of confidence in your original belief will probably decrease. These changes in belief can be expressed as probabilities. Say that originally you were 85% confident that the person was lying to you; after you speak to the other person, this probability is reduced to 60%.

The mathematician Thomas Bayes developed a formula for calculating the impact of new evidence on a pre-existing belief. If you hold a certain belief and encounter a new piece of information that bears on it, the formula tells you to what extent this information should change your belief. Bayes focused on situations with only two hypotheses or beliefs (A is lying vs A is not lying).

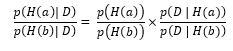

According to Bayes’ theorem we need to consider the prior odds, that is, the relative probability of both beliefs before the new piece of information was received, and the likelihood ratio, that is, the relative probabilities of obtaining the new information under each hypothesis. Bayes’ theorem uses the conditional probabilities of observing the data D given that hypothesis A is correct, written as $p(D \mid H_A)$, and given that hypothesis B is correct, $p(D \mid H_B)$. Bayes’ formula itself can be expressed as follows:

$$\frac{p(H_A \mid D)}{p(H_B \mid D)} = \frac{p(H_A)}{p(H_B)} \times \frac{p(D \mid H_A)}{p(D \mid H_B)}$$

On the left side of the equation are the relative conditional probabilities of hypotheses A and B given the data (the posterior odds), which is essentially what we want to figure out. On the right side we first have the prior odds of each hypothesis being correct before the data is collected. Then we have the likelihood ratio, which involves the probability of the data given each hypothesis.
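As a small illustration of how prior odds, likelihood ratio and posterior odds fit together, the following Python sketch revisits the earlier lying example, where confidence dropped from 85% to 60%. It works backwards to find the likelihood ratio that such an update would imply. The helper names are illustrative, and the calculation assumes the belief was updated exactly as Bayes’ formula prescribes, which real people rarely do.

```python
def odds(p: float) -> float:
    """Convert a probability into odds in favour of the belief."""
    return p / (1.0 - p)


def implied_likelihood_ratio(prior: float, posterior: float) -> float:
    """Likelihood ratio that moves a belief from `prior` to `posterior`.

    Rearranges: posterior odds = prior odds * likelihood ratio.
    """
    return odds(posterior) / odds(prior)


prior_lying = 0.85      # confidence that the person is lying, before the new information
posterior_lying = 0.60  # confidence after someone else supports their story

ratio = implied_likelihood_ratio(prior_lying, posterior_lying)

print(f"prior odds (lying : not lying):     {odds(prior_lying):.2f}")
print(f"posterior odds (lying : not lying): {odds(posterior_lying):.2f}")
print(f"implied likelihood ratio:           {ratio:.2f}")
```

A ratio below 1 means the new information is less likely to turn up if the person is lying than if they are telling the truth, which is why the belief weakens.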

If this sounds very abstract to you (like it did to me), then you can either take a break and google "how to become smarter" (like I did) or consider the following taxi-cab problem developed by Kahneman and Tversky (1980):

A taxi-cab was involved in a hit-and-run accident one night. Two cab companies, the Green and the Blue, operate in the city. You are given the following data: (a) 85% of the cabs in the city are Green, and 15% are Blue, and (b) in court a witness identified the cab as a Blue cab. However, the court tested the witness’s ability to identify cabs under appropriate visibility conditions. When presented with a series of cabs, half of which were Blue and half of which were Green, the witness made the correct identification in 80% of the cases, and was wrong in 20% of cases.

What was the probability that the cab involved in the accident was Blue rather than Green in percent?

I will refer to the hypothesis that the cab was green as H(g) and the hypothesis that it was blue as H(b). The prior probabilities are 0.85 for H(g) and 0.15 for H(b). The probability of the witness saying that the cab was blue when it really was blue, that is p(D | H(b)), is 0.80. The probability that the witness says that the cab is blue when it is actually green, that is p(D | H(g)), is 0.20. Entering these values into the formula above shows:

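$$
\frac{p(H_b \mid D)}{p(H_g \mid D)} = \frac{p(H_b)}{p(H_g)} \times \frac{p(D \mid H_b)}{p(D \mid H_g)} = \frac{0.15}{0.85} \times \frac{0.80}{0.20} = \frac{0.12}{0.17} = \frac{12}{17}
$$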

This tells us that the posterior odds of blue to green are 12:17, which corresponds to a probability of 12/29, or about 41%, that the taxi-cab involved in the accident was blue, versus a 59% chance that the cab was green.
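For readers who prefer to check the arithmetic themselves, here is a minimal Python sketch of the same calculation. The function name `posterior_from_odds` and its parameter names are my own choices for illustration; the numbers are the ones given in the taxi-cab problem.

```python
def posterior_from_odds(prior_h1, prior_h2, likelihood_h1, likelihood_h2):
    """Return (posterior odds of H1 to H2, posterior probability of H1).

    Assumes H1 and H2 are the only two hypotheses, as in the taxi-cab problem.
    """
    prior_odds = prior_h1 / prior_h2
    likelihood_ratio = likelihood_h1 / likelihood_h2
    posterior_odds = prior_odds * likelihood_ratio
    # Convert odds of H1:H2 into a probability for H1.
    posterior_prob = posterior_odds / (1 + posterior_odds)
    return posterior_odds, posterior_prob


# H1 = cab was blue, H2 = cab was green.
# Priors: 15% blue, 85% green. The witness says "blue" with
# probability 0.80 if the cab was blue and 0.20 if it was green.
odds_blue, p_blue = posterior_from_odds(0.15, 0.85, 0.80, 0.20)

print(f"Posterior odds (blue:green): {odds_blue:.3f}")
print(f"Probability the cab was blue: {p_blue:.1%}")
```

Setting both priors to 0.5 in the call makes the result equal to the witness's 80% accuracy, which is exactly the answer you get when the base rate is ignored.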

Kahneman and Tversky gave this problem to various Ivy League students and asked for their subjective probabilities. They found that most participants ignored the base-rate information regarding the relative frequencies of green and blue cabs in the city and instead relied on the testimony of the witness. A large proportion of the respondents maintained that there was an 80% likelihood that the cab was blue. As seen above, however, the correct answer is 41%.

Empirical evidence suggests that people take much less account of the prior odds, or base-rate information, than they should. Base-rate information was defined by Koehler as “the relative frequency with which an event occurs or an attribute is present in the population”. There have been many instances in which participants simply ignored this base-rate information, even though, according to Bayes' theorem, it should be a significant factor in the judgement-making process.

Kahneman and Tversky then altered the taxi-cab problem slightly, and in this new version participants did take the base-rate information into consideration. They changed part (a) of the original problem to:

Although the two companies are roughly equal in size, 85% of cab accidents in the city involve green cabs, and 15% involve blue cabs.

In this scenario, participants responded with a likelihood of 60% that the cab was blue, which is closer to the Bayesian answer than the 80% given for the original problem. The reason for this better estimate is that there is a more concrete causal relationship between a company's accident history and the likelihood of its cabs being involved in an accident. This causal relation was missing in the original problem, as participants didn’t consider the difference in the size of the two cab companies to be directly linked to the accident. Psychologists therefore concluded that base-rate information may be ignored in assessments, but that various factors, such as the presence of a causal relationship, can partially reverse this behaviour.

I want to present one more example that provides additional insight into the impact of base-rate information on judgements. I urge you to try to work out the following problem on your own before reading on.

The following problem was developed by Casscells, Schoenberger and Graboys in 1978 and was presented to faculty staff and students of Harvard Medical School:

If a test to detect a disease whose prevalence is 1/1000 has a false positive rate of 5%, what is the chance that a person found to have a positive result actually has the disease, assuming that you know nothing about the person’s symptoms or signs?

The base-rate information is that, out of 1,000 people, 999 do not have the disease and only one is truly infected. The false positive rate tells us that about 50 of the 999 healthy people would nevertheless test positive. Assuming the test also detects the one person who really is ill, roughly 51 people test positive and only one of them actually has the disease, so the chance that a person has the disease given a positive test result is about 1 in 51, or roughly 2%.
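The same reasoning can be sketched in a few lines of Python. This is only an illustration: the function name `positive_predictive_value` is my own, and the default `sensitivity=1.0` encodes the assumption (not stated in the original problem) that the test never misses a true case.

```python
def positive_predictive_value(prevalence, false_positive_rate, sensitivity=1.0):
    """Probability of having the disease given a positive test result.

    sensitivity=1.0 is an assumption: the problem statement only gives
    the prevalence and the false positive rate.
    """
    true_positives = prevalence * sensitivity
    false_positives = (1 - prevalence) * false_positive_rate
    return true_positives / (true_positives + false_positives)


ppv = positive_predictive_value(prevalence=1 / 1000, false_positive_rate=0.05)

# The same numbers expressed as counts in a group of 1,000 people.
population = 1000
sick = population * (1 / 1000)
healthy_but_positive = (population - sick) * 0.05

print(f"People with the disease: {sick:.0f}")
print(f"Healthy people who test positive: {healthy_but_positive:.2f}")
print(f"Chance of disease given a positive test: {ppv:.1%}")
```

Raising the prevalence in the call shows how strongly the answer depends on the base rate, which is the piece of information most respondents left out.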

Only about 18% of the participants gave the correct answer. Around half of the respondents didn’t take the base-rate information into account and gave the wrong answer of 95%. However, if the experimenters asked the participants to create an active pictorial representation of the information given, 92% of the respondents gave the right answer. More specifically, the participants were asked to colour in different squares to represent who had the disease and who didn’t. Thus, it seems that a visualization of information leads to a more accurate judgement than if the information is simply processed mentally.

Kahneman and Tversky conducted lots of research on why we often fail to make use of base-rate information. During their work, they came up with several heuristics that could explain this phenomenon.

1) Representativeness heuristic

When people use this heuristic, “events that are representative or typical of a class are assigned a high probability of occurrence. If an event is highly similar to most of the others in a population or class of events, then it is considered to be representative”. This heuristic is used when people are asked to decide the probability that a person or an object X belongs to a class or process Y. Kahneman and Tversky carried out a study in which they presented participants with the following scenario:

Linda is a former student activist, very intelligent, single and a philosophy graduate

Participants were then asked to assign a probability that Linda was (a) a bank teller, (b) a feminist or (c) a feminist bank teller. The estimated probability among the participants that Linda was a feminist bank teller was higher than the probability that she was a bank teller. This cannot be right, since the category “feminist bank teller” is a subset of the category “bank teller”. The description of Linda matched the characteristics that the participants believed a feminist bank teller would have, and they therefore based their probabilities solely on the matching of these traits. The fact that the base rate for bank tellers is necessarily at least as large as that for feminist bank tellers was completely ignored.
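The subset argument can be written as a one-line inequality. Using T for “Linda is a bank teller” and F for “Linda is a feminist” (labels I am introducing here for illustration), the rules of probability give:

$$
p(T \wedge F) = p(T) \times p(F \mid T) \le p(T), \quad \text{since } p(F \mid T) \le 1
$$

So however well the description fits a feminist, the probability of “feminist bank teller” can never exceed the probability of “bank teller”.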

2) Availability heuristic

This heuristic describes the phenomenon of estimating the frequency of events based on how easy it is to retrieve relevant information from long-term memory. Tversky and Kahneman discovered this heuristic by asking participants the following question:

If a word of three letters or more is sampled at random from an English text, is it more likely that the word starts with “r” or has “r” as its third letter?

Nearly all of the participants stated that it was more likely that a word starting with “r” was picked at random. In reality, the opposite is true. However, the participants could retrieve words starting with “r” more easily than thinking of words that had “r” in the third position. As a result, participants made the wrong judgement about the relative frequency of the two classes of words.

Another example that supports the availability heuristic is that people tend to judge murders to be more common than suicides. Again, in reality, the opposite is the case. However, the publicity that murders receive through extensive media reports and news stories makes these events more available in people's minds, and therefore easier to retrieve.

The last theory that I want to present is the support theory developed by Tversky and Koehler around 1994. The gist of this theory is that any given event is judged to be more likely the more explicitly it is described.

For example, how likely would you say it is that you will die on your next summer holiday? Unless you are planning to take the dreaded bus from Manali to Leh in India, I hope not very. However, the probability of that event seems higher if it is described in the following way: What is the probability that you will die on your next summer holiday from a disease, a sudden heart attack, an earthquake, terrorist activity, a civil war, a car accident, a plane crash or from any other cause?

According to support theory, the estimated probability for this description of the same event would be significantly higher. This is because humans usually do not assign probabilities to events themselves but rather to descriptions of those events, so the judged probability of an event depends on the explicitness of its description.

But why is it that a more explicit description of an event is regarded as having a greater probability than the exact same event described in less detail? According to Tversky and Koehler there are two main reasons:

1. “An explicit description may draw attention to aspects of the event that are less obvious in the non-explicit description”

2. “Memory limitations may mean that people do not remember all of the relevant information if it is not supplied”

Further evidence that is consistent with the assumptions of the support theory was provided by a group of experimental psychologists in 1993. In the experiment, some participants were offered hypothetical health insurance covering hospitalisation for any reason and the other participants were offered the health insurance covering hospitalisation for any disease or accident. Sure enough, even though the offers are the same, the second group of participants were willing to pay a higher premium for the insurance. Presumably, the explicit mentioning of diseases and accidents made it seem more likely that hospitalisation would be required.

Kahneman, Tversky and many other psychologists have conducted significant research on general heuristics that undermine our judgements in many different contexts. Being aware of these biases and heuristics can be of significant practical importance as it allows you to understand your judgement making process more deeply.